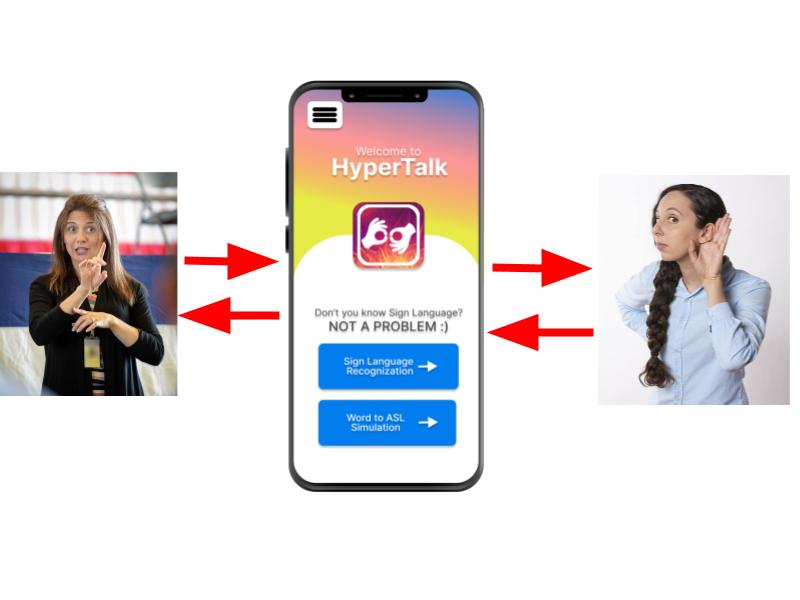

Real-time bidirectional sign language translating AI for deaf and speech-impaired individuals

Problem Definition and Solution Overview

According to the World Health Organization (WHO), more than 400 million people worldwide live with deafness or speech impairments. They are not the only ones who need a solution: their friends, family members, and neighbors also need an application that lets them communicate properly with one another.

Deaf and speech-impaired individuals rely on sign language for daily communication. Learning this language or translating it into spoken language is a challenge that we must address for future generations.

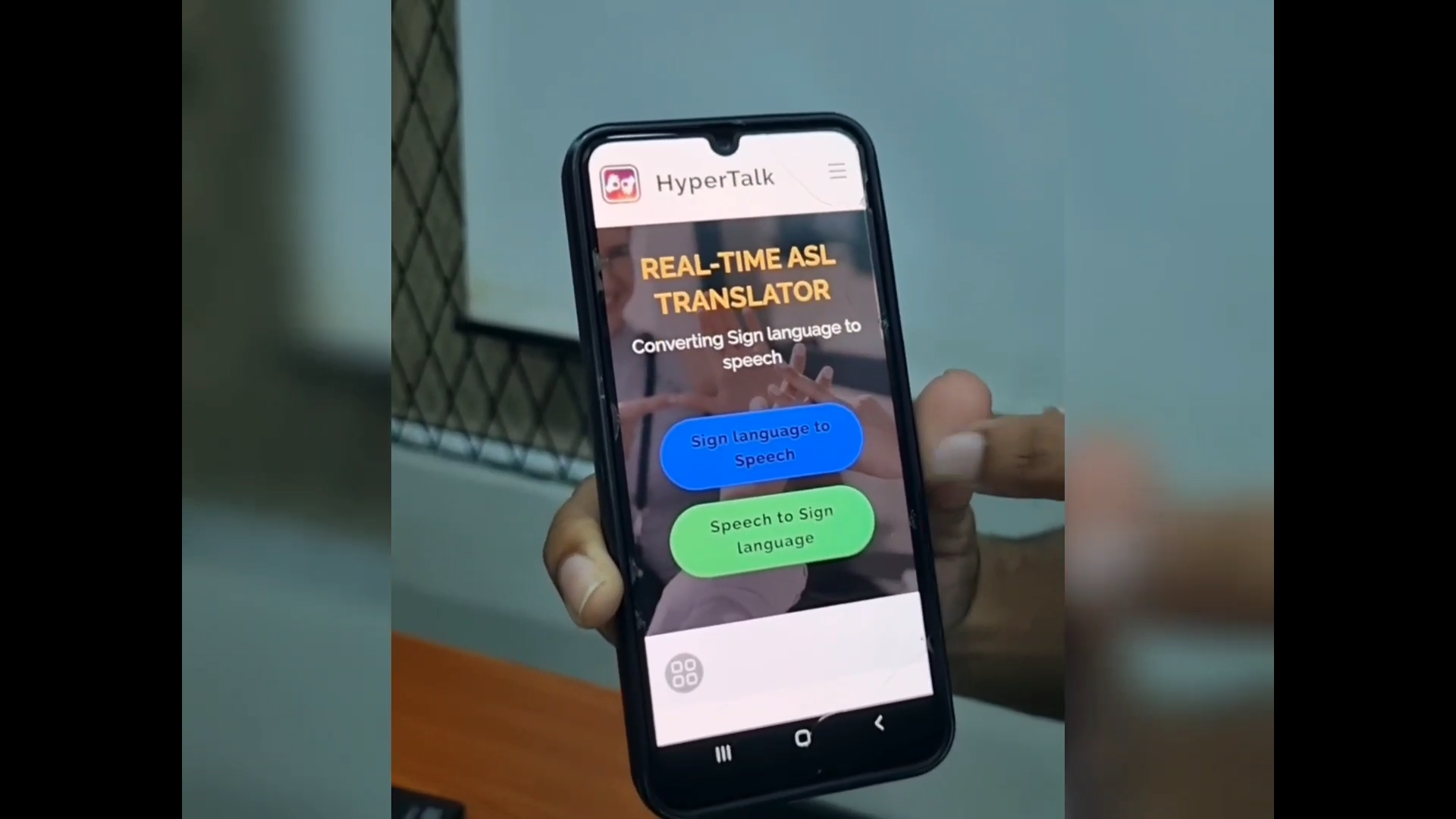

Our solution is a real-time, bidirectional sign language translation AI tool, available as a mobile app and a website and designed for deaf and speech-impaired individuals worldwide. It translates in real time in both directions:

1] Sign language captured by the phone camera to voice.

2] Voice input to sign language animations.

To achieve this, we are developing multiple deep-learning models to improve accuracy and reduce the Word Error Rate (WER). Our goal is to create a more accurate and practical product in the near future, continuing to positively impact the lives of those who rely on sign language for communication.
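As a point of reference, WER is conventionally computed as the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the model output, divided by the number of words in the reference. The following is a minimal sketch of that standard definition, not our evaluation code; the function name `word_error_rate` and the sample sentences are illustrative assumptions.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                       # deletion
                curr[j - 1] + 1,                   # insertion
                prev[j - 1] + substitution_cost,   # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # Illustrative sentences only: one of four reference words is missing.
    print(word_error_rate("MORGEN REGEN IM NORDEN", "MORGEN REGEN NORDEN"))
```

Because insertions are counted, WER can exceed 1.0 when the output is much longer than the reference.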

Implementation

Our product, a real-time bidirectional sign language translation AI tool, will be built on state-of-the-art technologies. We will employ our proprietary deep neural network for continuous sign language translation. This network will be trained to interpret sign language from the phone camera feed and convert it into voice in real time.

For the translation of voice to sign language, we will use speech recognition APIs to transcribe voice input into text. This text will then be translated into sign language animations using our deep learning models.
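The voice-to-sign direction described above can be pictured as three stages chained together. The sketch below only shows how the stages compose. Every name in it (`voice_to_sign`, `AnimationClip`, and the `fake_*` stand-ins) is a hypothetical placeholder: the real speech recognition API and the deep learning models are not specified here, so they are passed in as plain callables.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class AnimationClip:
    """Hypothetical handle to one sign animation."""
    gloss: str
    clip_id: str


def voice_to_sign(
    audio: bytes,
    transcribe: Callable[[bytes], str],
    text_to_glosses: Callable[[str], List[str]],
    clip_for_gloss: Callable[[str], AnimationClip],
) -> List[AnimationClip]:
    """Chain the three stages: speech -> text -> sign glosses -> animations."""
    text = transcribe(audio)            # stage 1: speech recognition API
    glosses = text_to_glosses(text)     # stage 2: translation model
    return [clip_for_gloss(g) for g in glosses]  # stage 3: animation lookup


# Stand-ins used only to exercise the pipeline; they are NOT real models.
def fake_transcribe(audio: bytes) -> str:
    return "hello how are you"


def fake_text_to_glosses(text: str) -> List[str]:
    # Placeholder: upper-cases each word. A real model must handle
    # sign language grammar, which differs from spoken-language word order.
    return [word.upper() for word in text.split()]


def fake_clip_for_gloss(gloss: str) -> AnimationClip:
    return AnimationClip(gloss=gloss, clip_id=f"clips/{gloss.lower()}")


if __name__ == "__main__":
    clips = voice_to_sign(
        b"", fake_transcribe, fake_text_to_glosses, fake_clip_for_gloss
    )
    print([clip.clip_id for clip in clips])
```

Passing the stages in as callables keeps the speech recognition provider and the translation model swappable, which suits the iterative testing and refinement planned for the models.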

Our approach to implementation will be iterative, with continuous testing and refinement to enhance the performance of our models. We will also focus on user experience, ensuring our product is accessible and easy to use.

Our deployment strategy will involve integration testing against existing systems and platforms, followed by a phased rollout so that we can manage the launch effectively and promptly address any issues that arise. Our aim is to have the product ready for launch within the planned timeline, thereby making a positive impact on the lives of those who rely on sign language for communication.

We plan to release the app with support for the sign languages listed below. To that end, we trained our deep neural networks on video datasets covering sign languages from different regions of the world.

The following datasets are used for initial training:

1] RWTH-PHOENIX-Weather 2014 Dataset (German Sign Language Videos)

https://www-i6.informatik.rwth-aachen.de/~koller/RWTH-PHOENIX/

2] OpenASL Dataset (American Sign Language Videos)

https://paperswithcode.com/dataset/openasl

3] CSL-Daily Dataset (Chinese Sign Language Videos)

https://ustc-slr.github.io/datasets/2021_csl_daily/

4] BOBSL Dataset (British Sign Language Videos)

https://paperswithcode.com/dataset/bobsl

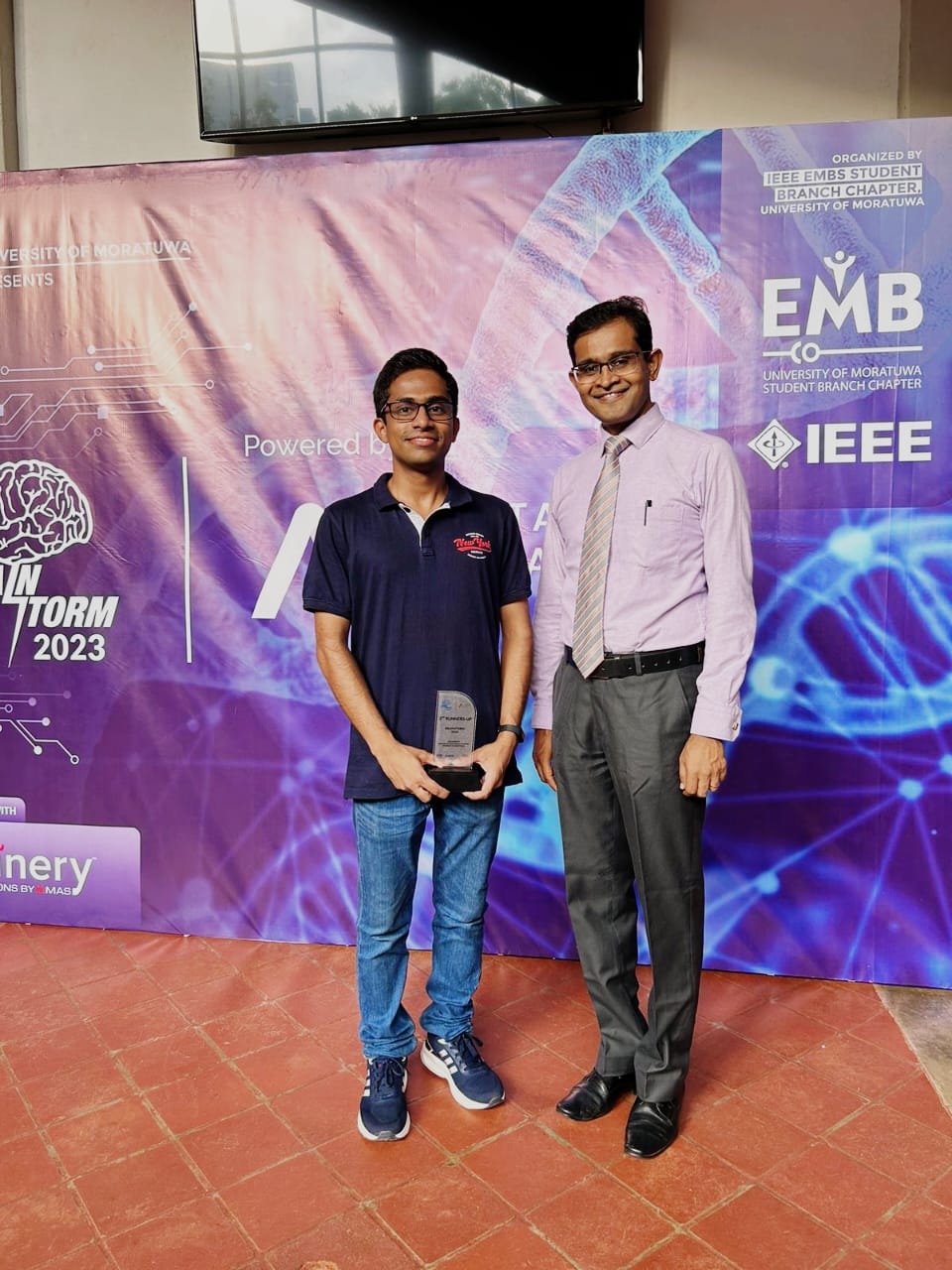

Team Members

1] Hasitha Gallella

2] Sandun Herath

3] Kavindu Damsith

4] Janeesha Wickramasinghe

5] Malitha Prabashana